By Kayt Sukel

As images of devastation continue to emerge from Turkey and northern Syria, it is clear that earthquakes can cause formidable damage that can haunt affected areas for years to come. To make cities in earthquake-prone regions more resilient, engineers rely on simulation models that help predict the potential effects of seismic activity on buildings and other key pieces of community infrastructure. Such models can inform structural engineering, architectural retrofitting, and earthquake recovery, yet the development of these data-heavy, physics-based models has, to date, been limited by the availability of supercomputing time to run them.

Now, scientists from Lawrence Berkeley National Laboratory (Berkeley Lab) and Lawrence Livermore National Laboratory have developed new simulation software, EQSIM, and announced that they will adapt the resulting data sets and release them to the public through the Pacific Earthquake Engineering Research Center (PEER).

Khalid Mosalam, Ph.D., the Taisei Professor of Civil Engineering at the University of California, Berkeley, and the director of PEER, says that the EQSIM data sets can help earthquake and structural engineers design and develop more earthquake-resistant structures across the greater San Francisco region. Equally important, such models can help stakeholders better understand community safety and resilience in those areas.

“With EQSIM, you can generate motion for any characteristics you desire for a specific region in the Bay Area and look at the performance of structures in that area subjected to that motion,” Mosalam says. “That can help us understand the potential risks to our infrastructure systems and inform decision-making about how to build more resilient cities in the future.”

The power of EQSIM

Today, all buildings and infrastructure in the Bay Area require some form of seismic evaluation because the region lies within both the Hayward and San Andreas fault zones. Typically, that analysis relies on historical data, says David McCallen, Ph.D., the EQSIM project lead, who also leads the critical infrastructure initiative for the Energy Geosciences Division’s resilient energy, water, and infrastructure program at Berkeley Lab.

“The challenge has long been that earthquake data is very, very sparse,” he explains. “We have very little data in what’s called the ‘near field’ of large magnitude earthquake sites, which is within 20 to 30 km of where big earthquakes have happened.”

Because of this scarcity, most structural and earthquake engineers have relied on records from earthquakes around the world, “homogenizing” the data to estimate seismic effects as they assess existing infrastructure or design new infrastructure in their own communities. Yet, McCallen says, such models cannot account for site-specific conditions.

“Site-specific motions are very dependent on the local geology — it affects the way the fault ruptures and the way seismic waves propagate,” he explains.

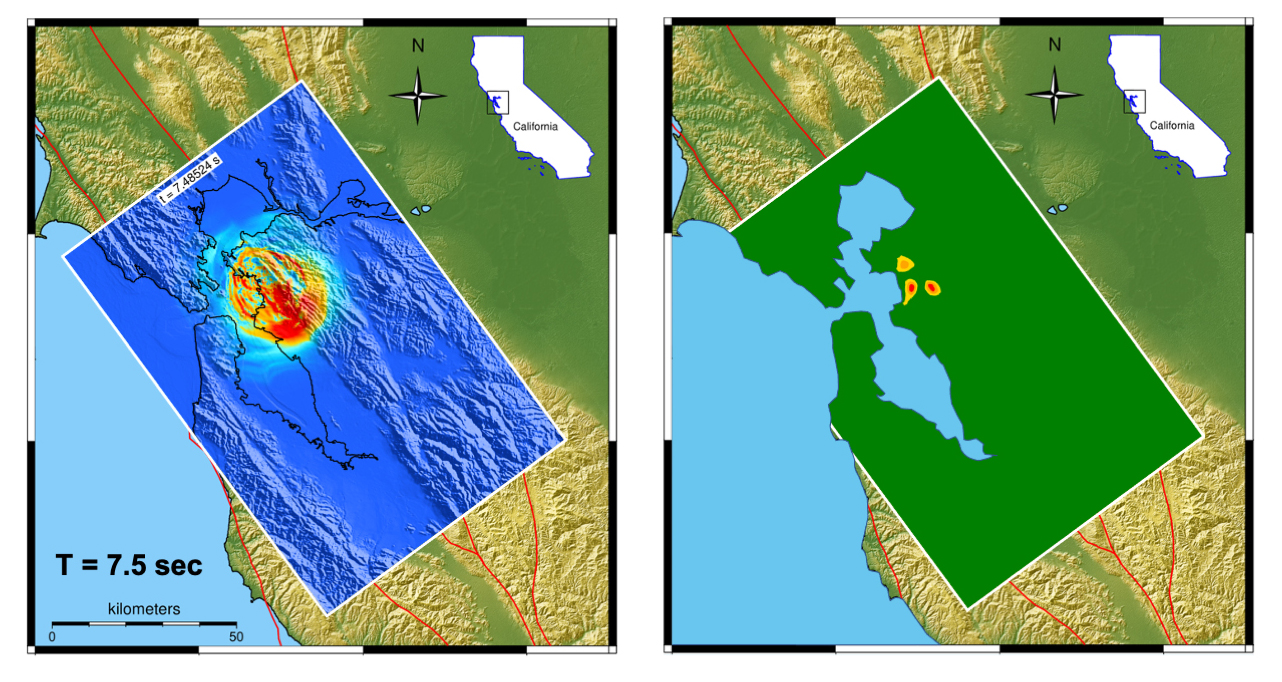

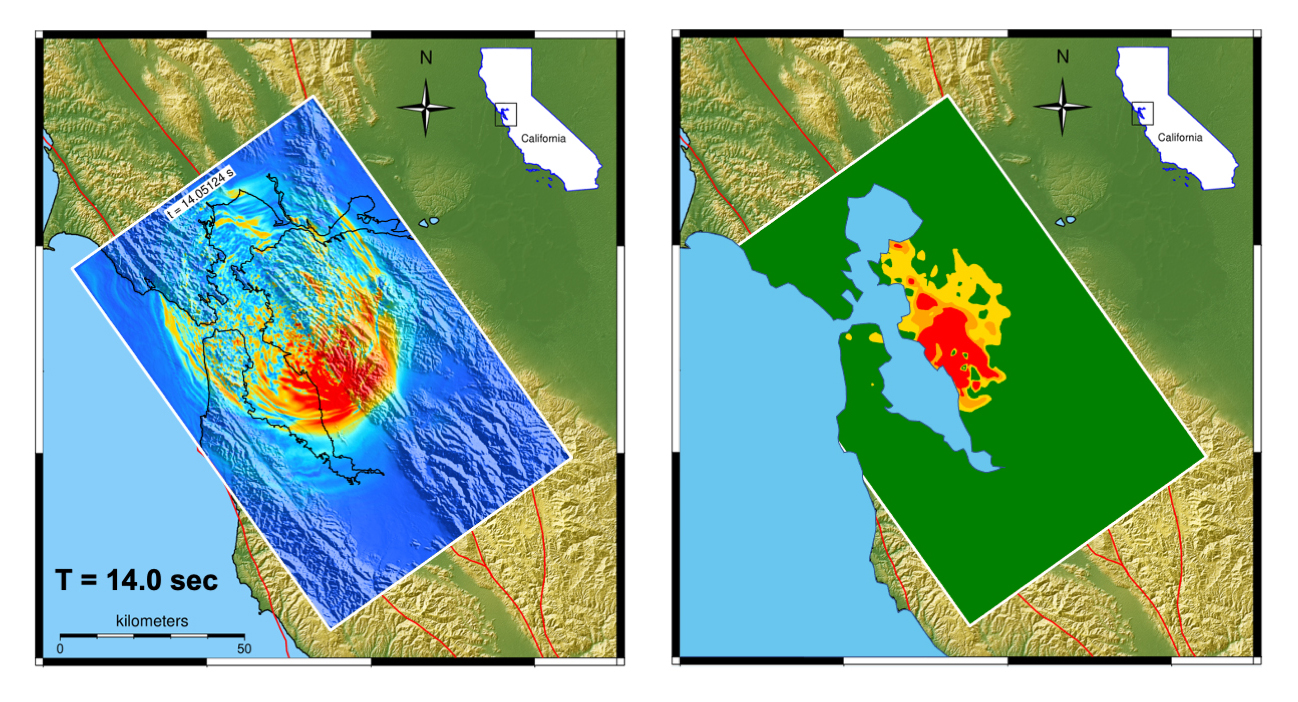

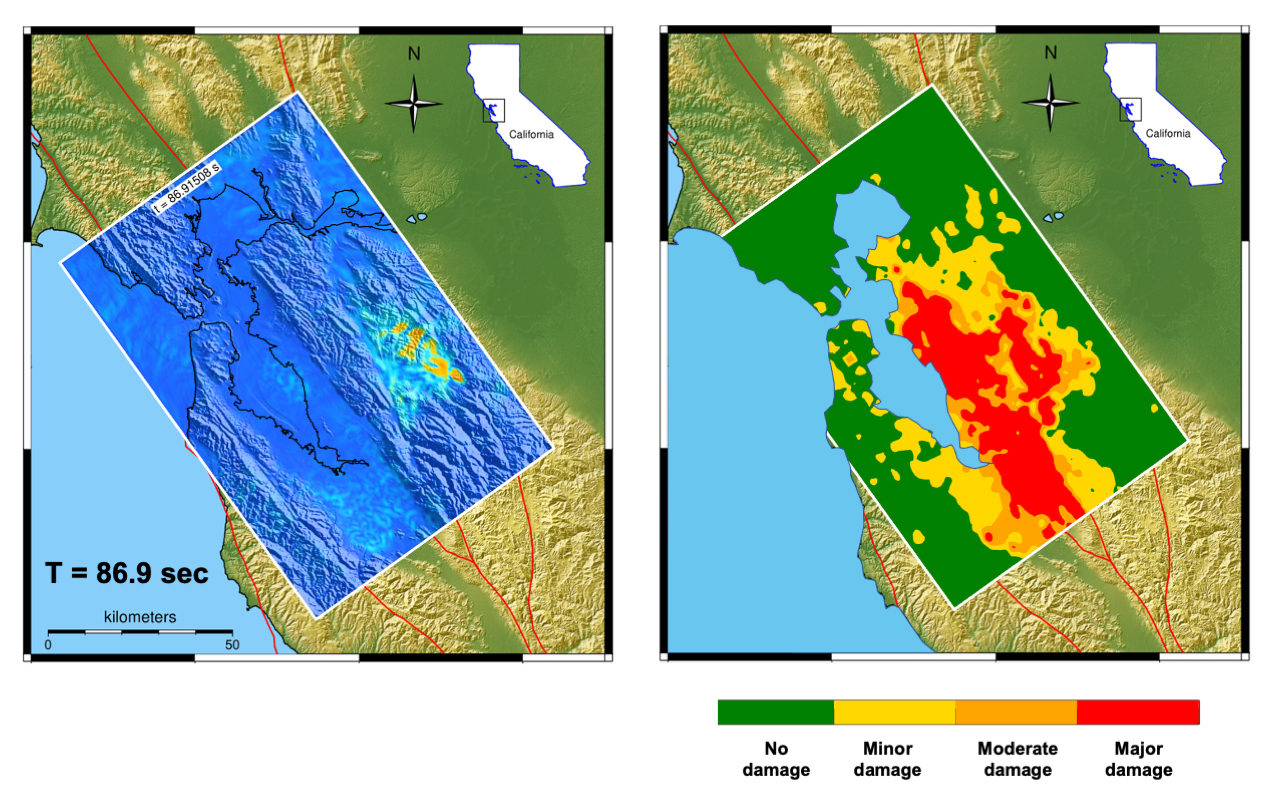

He and his team have spent the past six years developing a simulation model that more accurately reflects those site-specific motions, predicting ground motions at approximately every 2 m across the Bay Area. According to McCallen, the resulting simulation, EQSIM, delivers “unprecedented fidelity and spatial coverage” for the region.

“We modeled the geophysics with a wave propagation code,” he says. “We modeled the local soil, the structural response with an engineering code, and we’ve got all these models connected together across 391 billion grid points located across the Bay Area.”

Open access to data

Such a comprehensive model requires supercomputing power to run. The EQSIM team used the Summit supercomputer at Oak Ridge National Laboratory (ORNL) and the National Energy Research Scientific Computing Center’s Perlmutter system at Berkeley Lab to build and test the original model. The team has since been awarded computing time on the Department of Energy’s newest supercomputer, ORNL’s Frontier, the world’s first exascale computer, to further enhance the simulation.

“Using Frontier, we ran a model that was 1.2 trillion grid points — it gives you a sense of the size of these models,” McCallen says.

While the project originally focused on overcoming the computational barriers to developing site-specific earthquake simulations, EQSIM is now shifting toward applying the models to real-world problems. That’s where PEER comes in. The consortium of 20 universities develops, maintains, and updates databases of motions recorded during real earthquakes, and it will add the EQSIM database to its library.

“We get the data and put it in a standard format so that researchers and practitioners can make use of it in different engineering projects, assessing existing infrastructure or designing new structures,” Mosalam says. “This will give them realistic, site-specific motion that they can use in their own simulations and designs.”

Barbara G. Simpson, Ph.D., an assistant professor of civil and environmental engineering at Stanford University who is affiliated with its Blume Earthquake Engineering Center, says access to the data will give researchers like her the ability to test how structures respond to seismic events.

“Many engineers are interested in localized phenomena,” she says. “With this data, you can ask: What happens to structures near the fault — or at least the faults that we know of — and are those structures behaving differently? We can also start to consider, using this data, how can communities recover after an earthquake and how to plan disaster response.”

Mosalam says PEER hopes to have a beta version of the database available within a year or so. And, as it does with its other databases, PEER will continue to refine the offering based on user feedback.

“This will be several orders of magnitude larger than any existing ground motion database,” he says. “It’s going to be transformative for regional scale modeling.”

Garbage in, garbage out

While McCallen is excited about what structural, civil, and earthquake engineers will be able to do with EQSIM data, he cautions that any model is only as good as the data that go into it. Although EQSIM is the most comprehensive site-specific model to date, he acknowledges that some of the geologic data are not as good as he would like.

“We remain challenged by our current understanding of the area’s geology,” he says. “There are some areas where we don’t have great information about the geology, and that raises uncertainty. But organizations like the United States Geological Survey and others are working on producing refined, detailed geologic models, and that will help us improve over time.”

Despite that uncertainty, Simpson says the EQSIM data will not only help engineers better design and retrofit buildings in the Bay Area but also allow them to investigate how to better support infrastructure lifelines affected by earthquakes in the region.

“If we can model the buildings and the building damage, then we can start to think about what those buildings are and how to trace things like electric power networks, potable water, wastewater, energy system, and even transportation system lifelines,” she says. “You can start to look at these rippling effects that are interdependent and need to be assessed on a regional scale. That can help us, ultimately, make our communities more resilient and improve our ability to support recovery after an earthquake.”

This article first appeared in Civil Engineering Online.