University of Missouri

“I believe all of us working in transportation have one shared goal – to improve safety,” said Linlin Zhang, Ph.D., a civil engineering postdoc at the University of Missouri. “The key part is understanding how traffic behaves on the road.”

Traditional methods of analyzing traffic activity, like manual counting, are time-consuming, labor-intensive, and susceptible to human error. Cameras alone can provide accurate counts but are poor at measuring vehicle speed. Consequently, more traffic engineers are looking to lidar, which can provide more accurate and comprehensive spatial information.

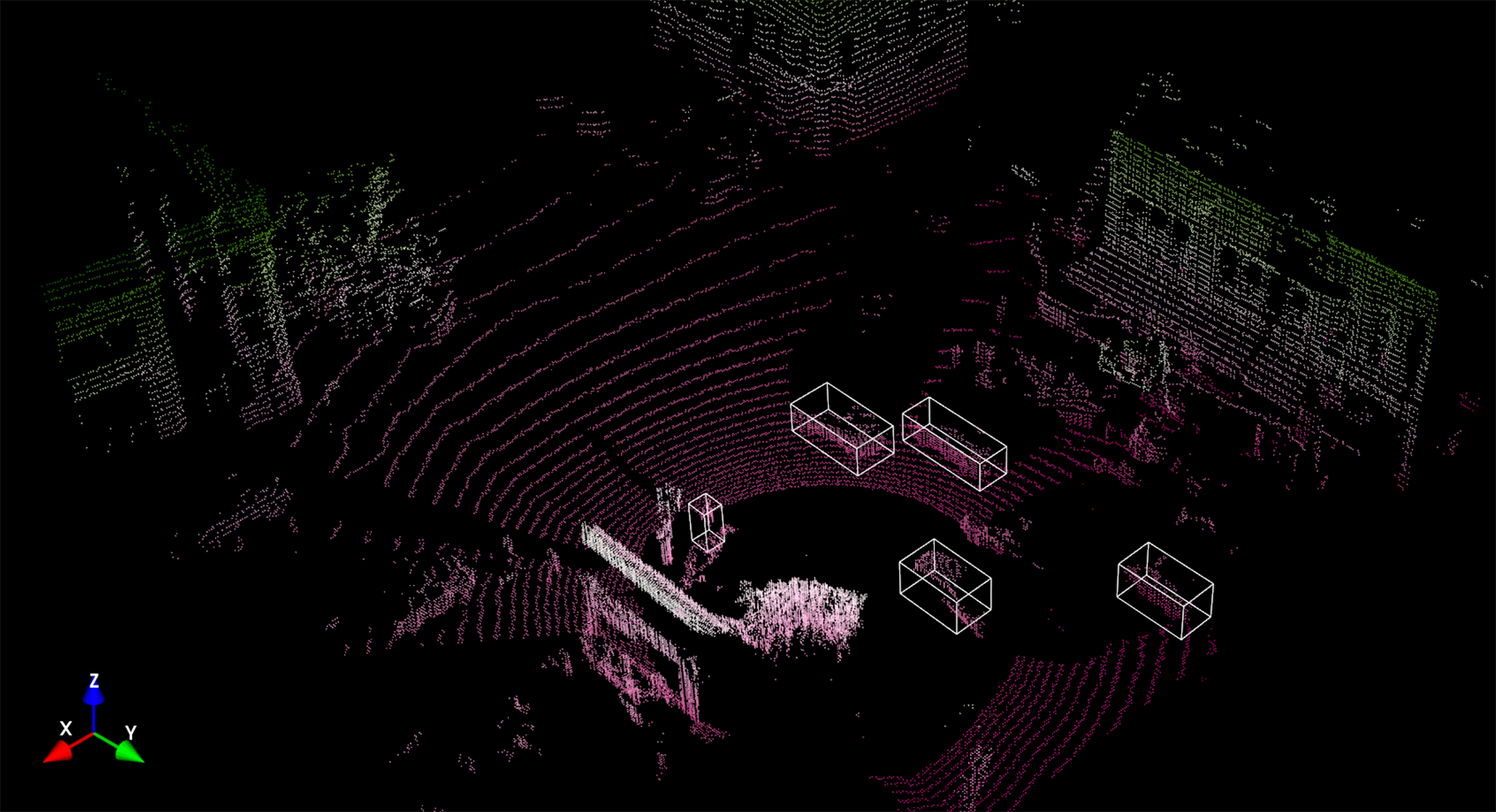

But lidar sensors have traditionally been quite expensive, and traffic analysis often requires multiple sensors to address occlusion issues. Plus, it’s time-intensive for engineers to draw the 3D “bounding boxes” around moving objects that allow computer systems to track and analyze them.

“While cameras are useful for basic vehicle counts, they rely on good lighting and, being mostly monocular, cannot directly capture depth,” Zhang said. “As a result, speed can only be estimated rather than precisely measured. Stereo cameras could solve this, but they are rarely used in roadside surveillance.”

Maximizing the technology

Lidar sensors have become more affordable over the last several years, which has opened new avenues of research that could yield real safety improvements on roads.

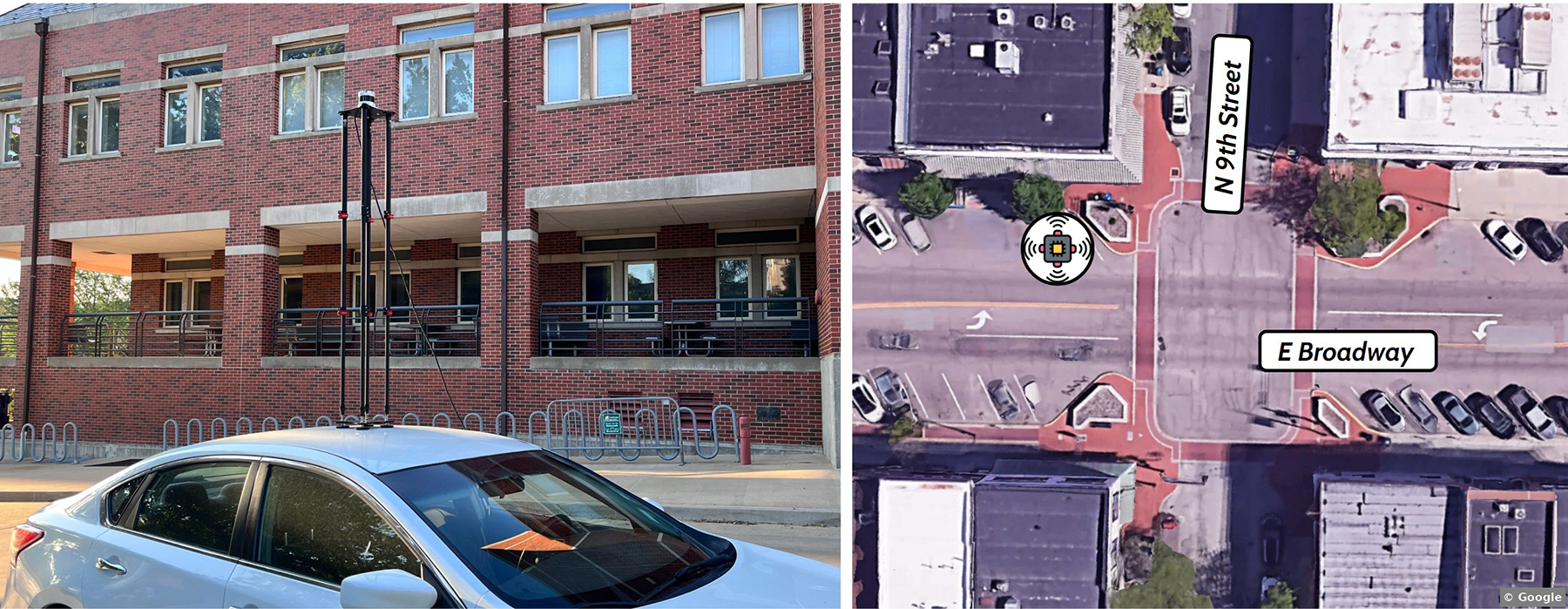

Zhang’s research, which took place in summer 2023, in collaboration with Yaw Adu-Gyamfi, Ph.D., an associate professor in civil engineering at the University of Missouri, included mounting a 64-channel lidar sensor atop a vehicle on a downtown street in Columbia, Missouri.

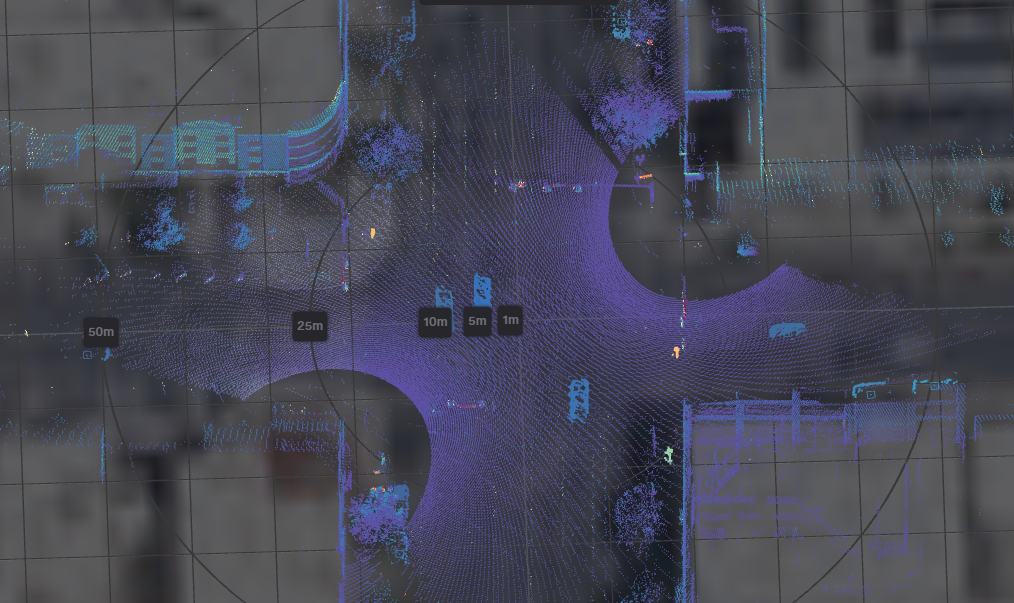

She converted the collected data, a 3D point cloud, into a 2D bird’s-eye-view image of the intersection. She then built a framework that combines a point cloud completion method she developed with Meta’s Segment Anything Model and other computer-vision techniques, such as background subtraction. The framework lets the system measure object height, speed, and acceleration from the lidar data without anyone having to draw a box manually around each object.
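To make the first steps concrete, here is a minimal Python sketch of a bird’s-eye-view projection followed by a simple background subtraction. It is an illustration only, not Zhang’s framework: the function names, the 0.5-meter cell size, the 40-meter range, and the median-based background are all assumptions chosen for the example.

```python
import numpy as np

def point_cloud_to_bev(points, x_range=(-40.0, 40.0), y_range=(-40.0, 40.0), cell=0.5):
    """Rasterize an (N, 3) array of x, y, z points into a 2D grid of max heights.

    Grid size and cell width are illustrative assumptions, not values from the study.
    """
    points = np.asarray(points, dtype=float)
    x, y, z = points[:, 0], points[:, 1], points[:, 2]

    # Drop points that fall outside the area of interest.
    keep = (x >= x_range[0]) & (x < x_range[1]) & (y >= y_range[0]) & (y < y_range[1])
    x, y, z = x[keep], y[keep], z[keep]

    n_cols = int(round((x_range[1] - x_range[0]) / cell))
    n_rows = int(round((y_range[1] - y_range[0]) / cell))

    # Map metric coordinates to pixel indices, guarding against rounding at the edge.
    cols = np.clip(((x - x_range[0]) / cell).astype(int), 0, n_cols - 1)
    rows = np.clip(((y - y_range[0]) / cell).astype(int), 0, n_rows - 1)

    # Keep the tallest point seen in each cell; empty cells end up as 0.
    bev = np.full((n_rows, n_cols), -np.inf)
    np.maximum.at(bev, (rows, cols), z)
    bev[np.isinf(bev)] = 0.0
    return bev

def foreground_mask(background_frames, current, min_height_diff=0.3):
    """Flag cells whose height differs from a median background image.

    A median over earlier frames is one common background model; it is an
    assumption here, not necessarily what the researchers used.
    """
    background = np.median(np.stack(background_frames), axis=0)
    return np.abs(current - background) > min_height_diff
```

Cells flagged by the mask are candidates for moving objects, which a segmentation model could then outline instead of a person drawing boxes by hand.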

The Segment Anything Model’s “zero-shot” capability lets it detect vehicles and pedestrians that were not included in the datasets used to train it.

“Zero shot means you can use a pretrained model directly, without needing to collect data or train it yourself,” Zhang said. “It makes the process much faster and could be really helpful for real-time traffic monitoring in the future.”

In April 2024, Zhang and Adu-Gyamfi used lidar for another research project in Columbia, which grew out of trying to count movements at intersections.

“Before we time a signal, we want to know how many people are going through from each direction, how many people are making left (turns), and then we use that information to give the appropriate green time for each movement,” Adu-Gyamfi said. “So the direction that has more vehicles, you want to give them more green time and all that.”
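The idea of giving busier movements more green time can be sketched in a few lines of Python. This is a deliberately simplified proportional split, not the method traffic engineers or the researchers actually use; the function name, the minimum green time, and the example counts are assumptions made up for illustration.

```python
def split_green_time(counts, total_green_s, min_green_s=7.0):
    """Divide a fixed green budget among movements in proportion to their counts.

    Every movement first receives a minimum green (an assumed value), and the
    remaining seconds are shared in proportion to the observed volumes.
    """
    n = len(counts)
    spare = total_green_s - n * min_green_s
    if spare < 0:
        raise ValueError("total green time is too short for the minimum greens")

    total_count = sum(counts.values())
    if total_count == 0:
        # No demand observed: fall back to an equal split.
        return {name: total_green_s / n for name in counts}

    return {
        name: min_green_s + spare * count / total_count
        for name, count in counts.items()
    }

# Hypothetical counts for two movements sharing 60 seconds of green.
plan = split_green_time({"northbound": 300, "southbound left": 100}, 60.0, min_green_s=10.0)
print(plan)
```

In this made-up case the movement with three times the volume receives most of the spare time, while the quieter one still gets its minimum.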

The researchers recorded the initial data with a camera at a busy intersection near MU’s football stadium. As they analyzed the data, they noticed several near-misses, but the camera was having a hard time classifying them because cars farther away were smaller. The AI model they were using would assume there were near-misses because it couldn’t clearly “see” what was going on.

So they studied the intersection for a week with both a camera and lidar – which allowed them to detect and classify every object coming into the intersection, including cars, bikes, and people.

“We are tracking a vehicle,” said Adu-Gyamfi. “And then we predict: This vehicle in the next three seconds will be here. This person in the next three seconds will be there. If their path crosses that’s a near-miss.”
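The three-second prediction Adu-Gyamfi describes can be illustrated with a short Python sketch. It assumes each tracked object keeps a constant velocity and flags a near-miss when two predicted positions come within a chosen distance at the same moment. The constant-velocity model, the 1.5-meter threshold, and the function names are assumptions for this example, not details from the research.

```python
import numpy as np

def predict_path(position, velocity, horizon_s=3.0, step_s=0.1):
    """Extrapolate a 2D position forward in time at constant velocity."""
    times = np.arange(0.0, horizon_s + step_s / 2, step_s)
    path = np.asarray(position, dtype=float) + times[:, None] * np.asarray(velocity, dtype=float)
    return times, path

def min_separation(pos_a, vel_a, pos_b, vel_b, horizon_s=3.0, step_s=0.1):
    """Return the smallest predicted distance between two objects and when it occurs."""
    times, path_a = predict_path(pos_a, vel_a, horizon_s, step_s)
    _, path_b = predict_path(pos_b, vel_b, horizon_s, step_s)
    dists = np.linalg.norm(path_a - path_b, axis=1)
    i = int(np.argmin(dists))
    return float(dists[i]), float(times[i])

def is_near_miss(pos_a, vel_a, pos_b, vel_b, threshold_m=1.5, horizon_s=3.0):
    """Flag a near-miss if the predicted paths come within threshold_m (assumed value)."""
    dist, _ = min_separation(pos_a, vel_a, pos_b, vel_b, horizon_s)
    return dist < threshold_m

# Hypothetical case: a car heading east at 10 m/s and a pedestrian walking north at 1.4 m/s.
print(is_near_miss((0.0, 0.0), (10.0, 0.0), (20.0, -2.8), (0.0, 1.4)))
```

Checking positions at matching time steps is a stricter test than checking whether two paths merely cross on the map, since two paths can intersect without the objects ever being close at the same time.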

The future is close

In 2023, 40,990 people died in roadway accidents in the U.S. Last year, the Federal Highway Administration announced $60 million in grants to transit agencies in Arizona, Texas, and Utah under its Saving Lives with Connectivity: Accelerating V2X Deployment program, meant to advance connected and interoperable vehicle technologies.

The idea is to move closer to deploying V2X, or “vehicle-to-everything,” technologies, which allow street infrastructure to talk to vehicles. The hope is that this communication can help the U.S. achieve zero traffic fatalities.

“Lidar is the real deal,” says Mark Taylor, P.E., PTOE, a traffic signal operations engineer with the Utah Department of Transportation. “The actual technology has evolved enough where it’s very accurate with what it does. The cost has come down.”

UDOT has deployed lidar at about 20 intersections; Taylor says lidar can see more of the activity at an intersection than technologies like video or radar. “It is seeing all of the lanes of the intersection and everything within a few hundred feet of it,” he says. “So you are seeing a lot more information.”

Utah Department of Transportation

Lidar can detect vehicle speed and rates of acceleration and deceleration. It can also detect pedestrian walking speed. For one, this means that crosswalk signals can be retimed on the fly to give a little more time to pedestrians who need it. Taylor says this is already happening at lidar-equipped intersections.
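The arithmetic behind that kind of retiming is simple: the time a pedestrian still needs is the remaining distance divided by walking speed. The Python sketch below shows one way it might be computed. It is not UDOT’s logic; the function name, the 10-second cap, and the example numbers are assumptions for illustration.

```python
def walk_extension_s(remaining_distance_m, walking_speed_mps,
                     remaining_clearance_s, max_extension_s=10.0):
    """Estimate how many extra seconds a pedestrian needs to finish crossing.

    The cap on the extension is an assumed safeguard so one slow crossing
    cannot hold the signal indefinitely.
    """
    if walking_speed_mps <= 0:
        # A stopped pedestrian gets the maximum allowed extension.
        return max_extension_s

    needed_s = remaining_distance_m / walking_speed_mps
    extra_s = max(0.0, needed_s - remaining_clearance_s)
    return min(extra_s, max_extension_s)

# Hypothetical case: 8 m left to cross at 0.8 m/s with 6 s left on the signal.
print(walk_extension_s(8.0, 0.8, 6.0))
```

A pedestrian walking fast enough to finish within the remaining time gets no extension, so the signal only changes for those who need it.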

A further goal would be to broadcast the location of a pedestrian in a crosswalk to nearby cars, which can then alert drivers if a collision is imminent.

“We are putting the technology out there hoping to entice the vehicle manufacturers,” Taylor says. But in the meantime, he adds, “we are using that technology right now in our snowplows and on our buses and on some of our vehicles to already get those safety features.”

With its federal grant, UDOT has installed V2X technology at half its traffic lights; the rest will be upgraded by the end of next year.

As lidar helps traffic planners better see complex interactions between pedestrians, cyclists, and drivers, new computer models may help better predict the likelihood of crashes, which will be crucial when driverless cars gain traction.

“Can we understand how pedestrians and drivers behave at intersections, so we can teach the self-driving cars how we behave?” said Adu-Gyamfi. “And that will definitely help with of course the rolling out of all these self-driving cars.”