Artificial intelligence is changing the civil engineering game in many ways.

From traffic management to infrastructure inspection and predictive maintenance, AI offers capabilities that can transform the way civil engineers do their jobs. But it isn’t without pitfalls.

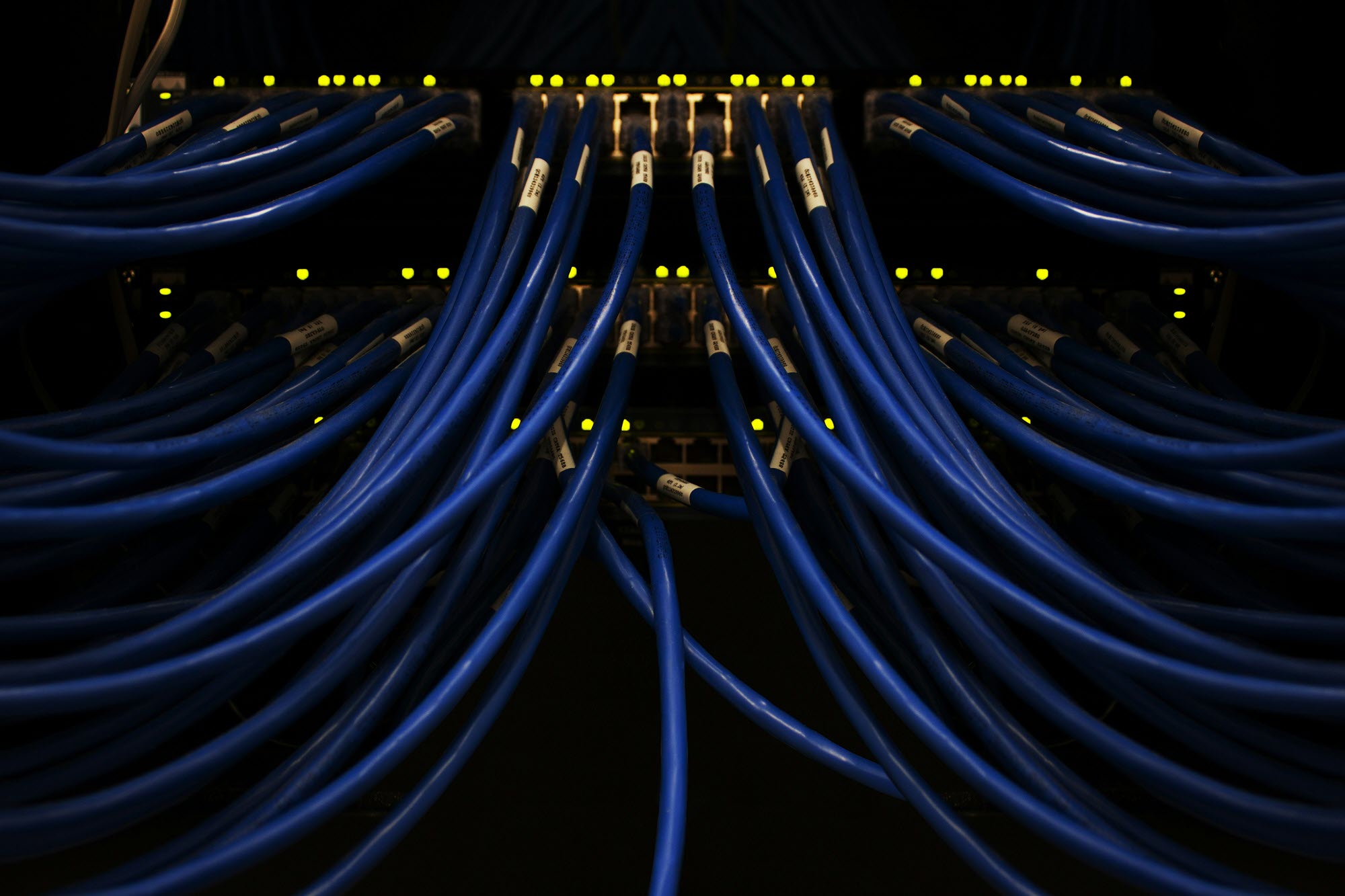

The digitization of information requires massive amounts of energy, and the data centers needed to support AI technology present environmental challenges. AI tools can also produce unreliable information, a problem that has yet to be solved.

A recent Thursdays@3 discussion brought civil engineers together to talk about where AI is going in civil engineering. Here is what they had to say about balancing the positives and negatives of AI.

Mikhail Chester

Director, Metis Center for Infrastructure and Sustainable Engineering at Arizona State University; Tempe, Arizona

“Right now, there's so much attention and so many resources being pumped into AI data centers as the next engine of innovation, manufacturing, and knowledge services in the U.S. But you simply can't have these things without the underlying services that civil engineers provide, like safe and reliable water or electricity.

“And there’s a potentially vicious feedback loop: You put in a data center, you don't make the investments in the aged and already fragile infrastructures that underpin these systems, and you break them. And who's going to suffer? Who's going to pay for that? We haven't yet tackled that question.

“I think as civil engineers, we have not made it clear that these systems don't simply work on their own without continued investment in financing, resources, and knowledge to ensure that we can modernize infrastructure to support data centers and the AI boom. These investments need to be front and center in discussions of how we make sense of this problem.”

Nalah Williams

Owner of Golden Mane Consulting; Dallas–Fort Worth, Texas

“Texas is already concerned because we've had issues with our power grid in the past, especially during some cold weather when it pretty much shut down. And now we're adding several data centers that will draw load from that same power grid, which they haven't really reinforced since it shut down.

“There is definitely a big concern for everyone about these data centers. The companies have already bought the land, and they're going to start construction very soon. I think from an engineering standpoint, as far as ethics are concerned, solutions have to be created right now. I don't know that the benefit we're getting from AI outweighs the damage it's also doing to the environment. I personally think that needs to be mitigated.

“Now, whether that comes from whoever is designing the data centers or whoever's running those companies, there has to be an offset for the usage that's happening because the funny cat videos are likely getting created more than what we're using for infrastructure data. So, is that really outweighing the damage?”

Navid Salami Pargoo

Research thrust manager at the Center for Smart Streetscapes; New York City

“Something that is important for everyone, no matter what the context is, is to educate ourselves on how to use such models and what ethics are actually attached to them. In terms of the usage, specifically in academia, we can see that a lot of the students are very much using these models to come up with final answers. But I try to teach them to use these models not to get the answer, but to ask the models to teach them the underlying knowledge required to come up with solutions to such questions. It’s at least a little bit better than just copy-pasting the solution; it gets them to think.

“Another side is about ethics, and how we actually disclose if and to what extent we are using such models or such assistance from any kind of generative model. … Right now, in terms of specifically the ethics of usage, the International Conference on Machine Learning is asking all of its reviewers to either declare that they use AI in their reviews or use no AI at all. …

“I believe we should raise a little bit of awareness about the ethics. If you want to use AI, you have to know how to use it and to what extent to use it. It's not like it gives you the best answer. It is a kind of assistance, like driver assistance. It's not autopilot. It doesn't drive by itself. You have to take full control and responsibility for it. But it is equipped to give you some more context about things that you might have missed if it wasn't there.”

Jessica Evans

Founder and president of Henrico Pierce Engineered Solutions and construction engineer at WSP in the U.S.; Portsmouth, Virginia

“When it comes to the safeguards that we should be putting in place when we're using AI for our organization or even just to help ourselves manage our workflow, we should have redundancy protocols in place to make sure we know where the data is coming from. A lot of times, it’s just a free-for-all if you don't put those safeguards into place. …

“If you're looking at how many times we’ve inspected a bridge and whether we have issues with girders between such-and-such spans, go through your verified data source that is being maintained by your organization. It's being protected by role-based access, so not everyone who has access in your enterprise is able to manipulate different data, which I think really helps.

“Also, make sure that you're constantly going through the AI models to see where things may be degrading or drifting, and listen to your feedback loop to see where you can refresh those redundancy protocols. I think developers can continue bias and security testing to make sure we get that right. But it also starts with how you're using it in your organizations, because the creators of these tools have their own agenda, or vision, for how they want them to be used.”

Explore more Thursdays@3 events.

Get ready for ASCE2027

Maybe you have big ideas of your own. Maybe you are looking for the right venue to share those big ideas. Maybe you want to get your big ideas in front of leading big thinkers from across the infrastructure space.

Maybe you should share your big ideas at ASCE2027: The Infrastructure and Engineering Experience – a first-of-its-kind event bringing together big thinkers from all across the infrastructure space, March 1-5, 2027, in Philadelphia.

The call for content is open now through March 4. Don’t wait. Get started today!